In the rapidly evolving landscape of artificial intelligence, one architecture dominates: the transformer. Since its introduction in 2017, the transformer has changed how machines process and understand data, becoming the backbone of many widely used applications. From large language models like GPT-4o and Claude to systems for image generation and speech recognition, transformers are ubiquitous in AI today. This article covers the mechanics behind transformers, their role in building scalable AI systems, and where the technology may go next.

| Feature/Aspect | Description |

|---|---|

| Transformers | Neural network architecture used for sequence modeling. |

| Common Applications | Language translation, sentence completion, speech recognition, image generation. |

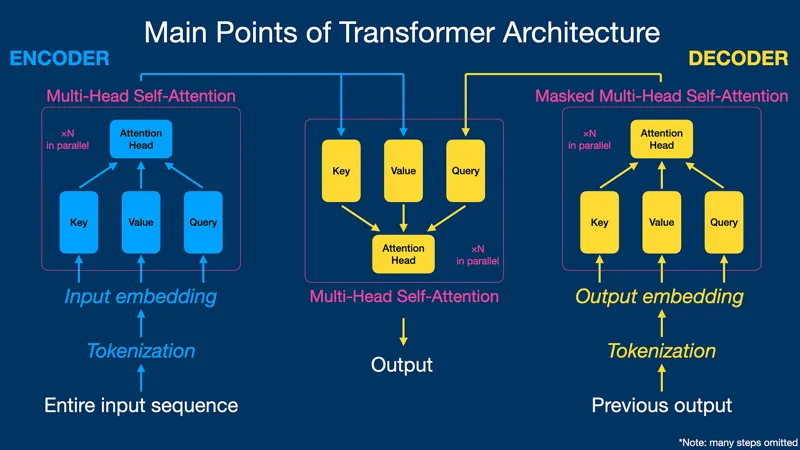

| Key Components | Encoder and decoder with attention layers. |

| Attention Types | Self-attention (within sequence) and cross-attention (between sequences). |

| Significant Models | GPT-4o, BERT, LLaMA, Gemini, Claude. |

| Innovations | GPU advancements, quantization, multi-GPU training, new optimizers like AdamW. |

| Future Trends | Continued use of transformers and potential rise of state-space models (SSMs). |

| Multimodal Models | Handle text, audio, and images, enhancing accessibility for disabled users. |

Understanding Transformers: The Basics

Transformers are like special engines for computers, helping them understand and create language. They are a type of neural network, a technology that learns from lots of examples. For instance, if you train a transformer on many English sentences paired with their Spanish or French translations, it can learn to translate new English sentences into those languages. This ability makes transformers very important for things like chatbots and translation apps.

The cool thing about transformers is that they can look at all the words in a sentence at once, instead of one by one. This means they can remember the context better, like knowing what a story is about by remembering details from earlier. This helps them create smarter responses or translations. Since their introduction in 2017, transformers have become the go-to technology for many language-based tasks.

Frequently Asked Questions

What is a transformer in AI?

A transformer is a neural network architecture designed to process sequences of data, which makes it well suited to tasks like language translation and automatic speech recognition.

Why are transformers important for AI models?

Transformers are crucial because their attention mechanism processes all positions in a sequence in parallel, which makes training on large datasets efficient. That is why they are the backbone of models like GPT-4o and BERT.

What is self-attention in transformers?

Self-attention enables transformers to understand relationships between words in the same sequence, helping maintain context even with long texts.
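
To make this concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification rather than a full transformer layer: it assumes queries, keys, and values are all the raw token vectors, whereas a real model first passes them through learned projection matrices. The function names and the tiny two-dimensional embeddings are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values.

    Assumption: no learned projections, to keep the sketch short.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this token against every token in the same sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # The output is a weighted average of all token vectors.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional embeddings for a three-token sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
```

Each row of `weights` shows how much one token attends to every token in the sequence, including itself. Because every token is scored against every other token directly, distance within the sequence does not weaken the connection.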

How do encoder-decoder architectures work?

Encoder-decoder architectures use an encoder to learn data representations and a decoder to generate new content, ideal for translation and summarization tasks.

What are multimodal models?

Multimodal models like GPT-4o can process different types of data, such as text, audio, and images, allowing for diverse applications like video captioning and voice cloning.

What advancements support transformer models?

Recent innovations include advanced GPU hardware, better training software, and techniques like quantization, which help models train faster and use less memory.
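
As a rough illustration of quantization, the sketch below maps floating-point weights to 8-bit integers using a single shared scale. This is one simple scheme (symmetric, per-tensor) chosen for clarity, not a description of how any particular library does it, and the function names and weight values are hypothetical.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(v / scale) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]

# Made-up weights, for illustration only.
weights = [0.5, -1.27, 0.03, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is at most half of one scale step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

An 8-bit integer takes one byte where a 32-bit float takes four, so weights stored this way need about a quarter of the memory, at the cost of the small rounding error measured by `max_error`.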

Are transformers likely to change in the future?

Transformers currently dominate AI, and research into alternatives like state-space models is ongoing. Even so, transformers are expected to remain central for the foreseeable future.

Summary

Transformers are a powerful technology driving many advanced AI models today. They are used in large language models like GPT-4o and LLaMA, as well as in applications like speech recognition and image generation. Transformers work by processing sequences of data, making them great for tasks such as translation and text completion. A key feature is the attention mechanism, which helps the model understand the context of words even if they are far apart in a sentence. As AI continues to evolve, transformers are expected to remain crucial, especially with exciting developments in multimodal models that can handle text, audio, and images.