Phi-4 AI Models: Revolutionizing Multimodal Capabilities

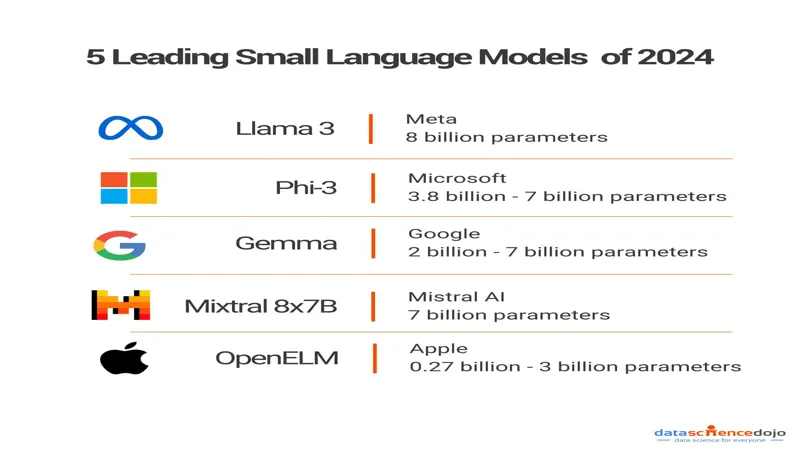

Microsoft has unveiled the Phi-4 family of AI models, which aims to deliver greater efficiency and capability in artificial intelligence. Designed to process text, images, and speech while using significantly less computing power than their predecessors, the Phi-4 models represent a notable step forward in the development of small language models (SLMs).